Keras 2 : examples : DeepLabV3+ を使用した多クラス・セマンティック・セグメンテーション (翻訳/解説)

翻訳 : (株)クラスキャット セールスインフォメーション

作成日時 : 11/17/2021 (keras 2.7.0)

* 本ページは、Keras の以下のドキュメントを翻訳した上で適宜、補足説明したものです:

- Code examples : Computer Vision : Multiclass semantic segmentation using DeepLabV3+ (Author: Soumik Rakshit)

* サンプルコードの動作確認はしておりますが、必要な場合には適宜、追加改変しています。

* ご自由にリンクを張って頂いてかまいませんが、sales-info@classcat.com までご一報いただけると嬉しいです。

- 人工知能研究開発支援

- 人工知能研修サービス(経営者層向けオンサイト研修)

- テクニカルコンサルティングサービス

- 実証実験(プロトタイプ構築)

- アプリケーションへの実装

- 人工知能研修サービス

- PoC(概念実証)を失敗させないための支援

- テレワーク & オンライン授業を支援

- お住まいの地域に関係なく Web ブラウザからご参加頂けます。事前登録 が必要ですのでご注意ください。

- ウェビナー運用には弊社製品「ClassCat® Webinar」を利用しています。

◆ お問合せ : 本件に関するお問い合わせ先は下記までお願いいたします。

- 株式会社クラスキャット セールス・マーケティング本部 セールス・インフォメーション

- E-Mail:sales-info@classcat.com ; WebSite: www.classcat.com ; Facebook

Keras 2 : examples : DeepLabV3+ を使用した多クラス・セマンティック・セグメンテーション

Description: 多クラス・セマンティック・セグメンテーションのための DeepLabV3+ アーキテクチャを実装します。

イントロダクション

画像の総てのピクセルに意味的なラベルを割り当てることを目的とした、セマンティック・セグメンテーションは必要不可欠なコンピュータ・ビジョンのタスクです。この例では、多クラス・セマンティック・セグメンテーションのために DeepLabV3+ モデルを実装します、これはセマンティック・セグメンテーション・ベンチマークを上手く遂行する完全畳み込みアーキテクチャです。

リファレンス

- Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

- Rethinking Atrous Convolution for Semantic Image Segmentation

- DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs

データのダウンロード

モデルを訓練するために Crowd Instance-level Human Parsing データセット を使用します。Crowd Instance-level Human Parsing (CIHP) データセットは 38,280 の多様な人物の画像を持ちます。CIHP の各画像はインスタンス・レベルの識別に加えて、20 カテゴリーに対してピクセル wise なアノテーションでラベル付けされています。データセットは「人物パートのセグメンテーション」タスクのために利用できます。

import os

import cv2

import numpy as np

from glob import glob

from scipy.io import loadmat

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

!gdown https://drive.google.com/uc?id=1B9A9UCJYMwTL4oBEo4RZfbMZMaZhKJaz

!unzip -q instance-level-human-parsing.zip

Downloading... From: https://drive.google.com/uc?id=1B9A9UCJYMwTL4oBEo4RZfbMZMaZhKJaz To: /content/keras-io/scripts/tmp_4374681/instance-level-human-parsing.zip 2.91GB [00:36, 79.6MB/s]

TensorFlow データセットの作成

38,280 画像を持つ CIHP データセット全体で訓練するには非常に多くの時間がかかりますので、このサンプルではモデルを訓練するために 200 画像の小さいサブセットを使用していきます。

IMAGE_SIZE = 512

BATCH_SIZE = 4

NUM_CLASSES = 20

DATA_DIR = "./instance-level_human_parsing/instance-level_human_parsing/Training"

NUM_TRAIN_IMAGES = 1000

NUM_VAL_IMAGES = 50

train_images = sorted(glob(os.path.join(DATA_DIR, "Images/*")))[:NUM_TRAIN_IMAGES]

train_masks = sorted(glob(os.path.join(DATA_DIR, "Category_ids/*")))[:NUM_TRAIN_IMAGES]

val_images = sorted(glob(os.path.join(DATA_DIR, "Images/*")))[

NUM_TRAIN_IMAGES : NUM_VAL_IMAGES + NUM_TRAIN_IMAGES

]

val_masks = sorted(glob(os.path.join(DATA_DIR, "Category_ids/*")))[

NUM_TRAIN_IMAGES : NUM_VAL_IMAGES + NUM_TRAIN_IMAGES

]

def read_image(image_path, mask=False):

image = tf.io.read_file(image_path)

if mask:

image = tf.image.decode_png(image, channels=1)

image.set_shape([None, None, 1])

image = tf.image.resize(images=image, size=[IMAGE_SIZE, IMAGE_SIZE])

else:

image = tf.image.decode_png(image, channels=3)

image.set_shape([None, None, 3])

image = tf.image.resize(images=image, size=[IMAGE_SIZE, IMAGE_SIZE])

image = image / 127.5 - 1

return image

def load_data(image_list, mask_list):

image = read_image(image_list)

mask = read_image(mask_list, mask=True)

return image, mask

def data_generator(image_list, mask_list):

dataset = tf.data.Dataset.from_tensor_slices((image_list, mask_list))

dataset = dataset.map(load_data, num_parallel_calls=tf.data.AUTOTUNE)

dataset = dataset.batch(BATCH_SIZE, drop_remainder=True)

return dataset

train_dataset = data_generator(train_images, train_masks)

val_dataset = data_generator(val_images, val_masks)

print("Train Dataset:", train_dataset)

print("Val Dataset:", val_dataset)

Train Dataset:Val Dataset:

DeepLabV3+ モデルの構築

DeepLabv3+ はエンコーダ・デコーダ構造を追加することにより DeepLabv3 を拡張しています。エンコーダ・モジュールが複数のスケールで dilated 畳み込みを適用することによりマルチスケールのコンテキスト情報を処理する一方で、デコーダ・モジュールはオブジェクト境界に沿ってセグメンテーション結果を洗練します。

Dilated 畳み込み : dilated 畳み込みでは、ネットワークの深部に進むにつれて、パラメータ数や計算量を増やすことなく大きな視野を持ちながらストライドを一定に保つことができます。更に、より大きな出力特徴マップを可能にし、これはセマンティック・セグメンテーションのために有用です。

Dilated Spatial ピラミッドプーリング を使用する理由は、サンプリング・レートが大きくなるにつれて、有効なフィルタ重み (i.e. パディングされたゼロの代わりに、有効な特徴領域に適用される重み) の数が小さくなるころが示されたからです。

def convolution_block(

block_input,

num_filters=256,

kernel_size=3,

dilation_rate=1,

padding="same",

use_bias=False,

):

x = layers.Conv2D(

num_filters,

kernel_size=kernel_size,

dilation_rate=dilation_rate,

padding="same",

use_bias=use_bias,

kernel_initializer=keras.initializers.HeNormal(),

)(block_input)

x = layers.BatchNormalization()(x)

return tf.nn.relu(x)

def DilatedSpatialPyramidPooling(dspp_input):

dims = dspp_input.shape

x = layers.AveragePooling2D(pool_size=(dims[-3], dims[-2]))(dspp_input)

x = convolution_block(x, kernel_size=1, use_bias=True)

out_pool = layers.UpSampling2D(

size=(dims[-3] // x.shape[1], dims[-2] // x.shape[2]), interpolation="bilinear",

)(x)

out_1 = convolution_block(dspp_input, kernel_size=1, dilation_rate=1)

out_6 = convolution_block(dspp_input, kernel_size=3, dilation_rate=6)

out_12 = convolution_block(dspp_input, kernel_size=3, dilation_rate=12)

out_18 = convolution_block(dspp_input, kernel_size=3, dilation_rate=18)

x = layers.Concatenate(axis=-1)([out_pool, out_1, out_6, out_12, out_18])

output = convolution_block(x, kernel_size=1)

return output

エンコーダ特徴は最初に因子 4 で双線形にアップサンプリングされてから、同じ空間解像度を持つネットワーク・バックボーンからの対応する低レベル特徴と連結されます。この例については、バックボーン・モデルとして ImageNet で事前訓練された ResNet50 を使用します、そしてバックボーンの conv4_block6_2_relu ブロックからの低レベル特徴を使用します。

def DeeplabV3Plus(image_size, num_classes):

model_input = keras.Input(shape=(image_size, image_size, 3))

resnet50 = keras.applications.ResNet50(

weights="imagenet", include_top=False, input_tensor=model_input

)

x = resnet50.get_layer("conv4_block6_2_relu").output

x = DilatedSpatialPyramidPooling(x)

input_a = layers.UpSampling2D(

size=(image_size // 4 // x.shape[1], image_size // 4 // x.shape[2]),

interpolation="bilinear",

)(x)

input_b = resnet50.get_layer("conv2_block3_2_relu").output

input_b = convolution_block(input_b, num_filters=48, kernel_size=1)

x = layers.Concatenate(axis=-1)([input_a, input_b])

x = convolution_block(x)

x = convolution_block(x)

x = layers.UpSampling2D(

size=(image_size // x.shape[1], image_size // x.shape[2]),

interpolation="bilinear",

)(x)

model_output = layers.Conv2D(num_classes, kernel_size=(1, 1), padding="same")(x)

return keras.Model(inputs=model_input, outputs=model_output)

model = DeeplabV3Plus(image_size=IMAGE_SIZE, num_classes=NUM_CLASSES)

model.summary()

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/resnet/resnet50_weights_tf_dim_ordering_tf_kernels_notop.h5

94773248/94765736 [==============================] - 1s 0us/step

94781440/94765736 [==============================] - 1s 0us/step

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 512, 512, 3) 0

__________________________________________________________________________________________________

conv1_pad (ZeroPadding2D) (None, 518, 518, 3) 0 input_1[0][0]

__________________________________________________________________________________________________

conv1_conv (Conv2D) (None, 256, 256, 64) 9472 conv1_pad[0][0]

__________________________________________________________________________________________________

conv1_bn (BatchNormalization) (None, 256, 256, 64) 256 conv1_conv[0][0]

__________________________________________________________________________________________________

conv1_relu (Activation) (None, 256, 256, 64) 0 conv1_bn[0][0]

__________________________________________________________________________________________________

pool1_pad (ZeroPadding2D) (None, 258, 258, 64) 0 conv1_relu[0][0]

__________________________________________________________________________________________________

pool1_pool (MaxPooling2D) (None, 128, 128, 64) 0 pool1_pad[0][0]

__________________________________________________________________________________________________

conv2_block1_1_conv (Conv2D) (None, 128, 128, 64) 4160 pool1_pool[0][0]

__________________________________________________________________________________________________

conv2_block1_1_bn (BatchNormali (None, 128, 128, 64) 256 conv2_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_1_relu (Activation (None, 128, 128, 64) 0 conv2_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_2_conv (Conv2D) (None, 128, 128, 64) 36928 conv2_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_2_bn (BatchNormali (None, 128, 128, 64) 256 conv2_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_2_relu (Activation (None, 128, 128, 64) 0 conv2_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_0_conv (Conv2D) (None, 128, 128, 256 16640 pool1_pool[0][0]

__________________________________________________________________________________________________

conv2_block1_3_conv (Conv2D) (None, 128, 128, 256 16640 conv2_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_0_bn (BatchNormali (None, 128, 128, 256 1024 conv2_block1_0_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_3_bn (BatchNormali (None, 128, 128, 256 1024 conv2_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_add (Add) (None, 128, 128, 256 0 conv2_block1_0_bn[0][0]

conv2_block1_3_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_out (Activation) (None, 128, 128, 256 0 conv2_block1_add[0][0]

__________________________________________________________________________________________________

conv2_block2_1_conv (Conv2D) (None, 128, 128, 64) 16448 conv2_block1_out[0][0]

__________________________________________________________________________________________________

conv2_block2_1_bn (BatchNormali (None, 128, 128, 64) 256 conv2_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_1_relu (Activation (None, 128, 128, 64) 0 conv2_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_2_conv (Conv2D) (None, 128, 128, 64) 36928 conv2_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block2_2_bn (BatchNormali (None, 128, 128, 64) 256 conv2_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_2_relu (Activation (None, 128, 128, 64) 0 conv2_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_3_conv (Conv2D) (None, 128, 128, 256 16640 conv2_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv2_block2_3_bn (BatchNormali (None, 128, 128, 256 1024 conv2_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_add (Add) (None, 128, 128, 256 0 conv2_block1_out[0][0]

conv2_block2_3_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_out (Activation) (None, 128, 128, 256 0 conv2_block2_add[0][0]

__________________________________________________________________________________________________

conv2_block3_1_conv (Conv2D) (None, 128, 128, 64) 16448 conv2_block2_out[0][0]

__________________________________________________________________________________________________

conv2_block3_1_bn (BatchNormali (None, 128, 128, 64) 256 conv2_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_1_relu (Activation (None, 128, 128, 64) 0 conv2_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_2_conv (Conv2D) (None, 128, 128, 64) 36928 conv2_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block3_2_bn (BatchNormali (None, 128, 128, 64) 256 conv2_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_2_relu (Activation (None, 128, 128, 64) 0 conv2_block3_2_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_3_conv (Conv2D) (None, 128, 128, 256 16640 conv2_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv2_block3_3_bn (BatchNormali (None, 128, 128, 256 1024 conv2_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_add (Add) (None, 128, 128, 256 0 conv2_block2_out[0][0]

conv2_block3_3_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_out (Activation) (None, 128, 128, 256 0 conv2_block3_add[0][0]

__________________________________________________________________________________________________

conv3_block1_1_conv (Conv2D) (None, 64, 64, 128) 32896 conv2_block3_out[0][0]

__________________________________________________________________________________________________

conv3_block1_1_bn (BatchNormali (None, 64, 64, 128) 512 conv3_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_1_relu (Activation (None, 64, 64, 128) 0 conv3_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_2_conv (Conv2D) (None, 64, 64, 128) 147584 conv3_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_2_bn (BatchNormali (None, 64, 64, 128) 512 conv3_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_2_relu (Activation (None, 64, 64, 128) 0 conv3_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_0_conv (Conv2D) (None, 64, 64, 512) 131584 conv2_block3_out[0][0]

__________________________________________________________________________________________________

conv3_block1_3_conv (Conv2D) (None, 64, 64, 512) 66048 conv3_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_0_bn (BatchNormali (None, 64, 64, 512) 2048 conv3_block1_0_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_3_bn (BatchNormali (None, 64, 64, 512) 2048 conv3_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_add (Add) (None, 64, 64, 512) 0 conv3_block1_0_bn[0][0]

conv3_block1_3_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_out (Activation) (None, 64, 64, 512) 0 conv3_block1_add[0][0]

__________________________________________________________________________________________________

conv3_block2_1_conv (Conv2D) (None, 64, 64, 128) 65664 conv3_block1_out[0][0]

__________________________________________________________________________________________________

conv3_block2_1_bn (BatchNormali (None, 64, 64, 128) 512 conv3_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_1_relu (Activation (None, 64, 64, 128) 0 conv3_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_2_conv (Conv2D) (None, 64, 64, 128) 147584 conv3_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block2_2_bn (BatchNormali (None, 64, 64, 128) 512 conv3_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_2_relu (Activation (None, 64, 64, 128) 0 conv3_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_3_conv (Conv2D) (None, 64, 64, 512) 66048 conv3_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block2_3_bn (BatchNormali (None, 64, 64, 512) 2048 conv3_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_add (Add) (None, 64, 64, 512) 0 conv3_block1_out[0][0]

conv3_block2_3_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_out (Activation) (None, 64, 64, 512) 0 conv3_block2_add[0][0]

__________________________________________________________________________________________________

conv3_block3_1_conv (Conv2D) (None, 64, 64, 128) 65664 conv3_block2_out[0][0]

__________________________________________________________________________________________________

conv3_block3_1_bn (BatchNormali (None, 64, 64, 128) 512 conv3_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_1_relu (Activation (None, 64, 64, 128) 0 conv3_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_2_conv (Conv2D) (None, 64, 64, 128) 147584 conv3_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block3_2_bn (BatchNormali (None, 64, 64, 128) 512 conv3_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_2_relu (Activation (None, 64, 64, 128) 0 conv3_block3_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_3_conv (Conv2D) (None, 64, 64, 512) 66048 conv3_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block3_3_bn (BatchNormali (None, 64, 64, 512) 2048 conv3_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_add (Add) (None, 64, 64, 512) 0 conv3_block2_out[0][0]

conv3_block3_3_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_out (Activation) (None, 64, 64, 512) 0 conv3_block3_add[0][0]

__________________________________________________________________________________________________

conv3_block4_1_conv (Conv2D) (None, 64, 64, 128) 65664 conv3_block3_out[0][0]

__________________________________________________________________________________________________

conv3_block4_1_bn (BatchNormali (None, 64, 64, 128) 512 conv3_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_1_relu (Activation (None, 64, 64, 128) 0 conv3_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_2_conv (Conv2D) (None, 64, 64, 128) 147584 conv3_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block4_2_bn (BatchNormali (None, 64, 64, 128) 512 conv3_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_2_relu (Activation (None, 64, 64, 128) 0 conv3_block4_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_3_conv (Conv2D) (None, 64, 64, 512) 66048 conv3_block4_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block4_3_bn (BatchNormali (None, 64, 64, 512) 2048 conv3_block4_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_add (Add) (None, 64, 64, 512) 0 conv3_block3_out[0][0]

conv3_block4_3_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_out (Activation) (None, 64, 64, 512) 0 conv3_block4_add[0][0]

__________________________________________________________________________________________________

conv4_block1_1_conv (Conv2D) (None, 32, 32, 256) 131328 conv3_block4_out[0][0]

__________________________________________________________________________________________________

conv4_block1_1_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_1_relu (Activation (None, 32, 32, 256) 0 conv4_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_2_conv (Conv2D) (None, 32, 32, 256) 590080 conv4_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_2_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_2_relu (Activation (None, 32, 32, 256) 0 conv4_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_0_conv (Conv2D) (None, 32, 32, 1024) 525312 conv3_block4_out[0][0]

__________________________________________________________________________________________________

conv4_block1_3_conv (Conv2D) (None, 32, 32, 1024) 263168 conv4_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_0_bn (BatchNormali (None, 32, 32, 1024) 4096 conv4_block1_0_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_3_bn (BatchNormali (None, 32, 32, 1024) 4096 conv4_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_add (Add) (None, 32, 32, 1024) 0 conv4_block1_0_bn[0][0]

conv4_block1_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_out (Activation) (None, 32, 32, 1024) 0 conv4_block1_add[0][0]

__________________________________________________________________________________________________

conv4_block2_1_conv (Conv2D) (None, 32, 32, 256) 262400 conv4_block1_out[0][0]

__________________________________________________________________________________________________

conv4_block2_1_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_1_relu (Activation (None, 32, 32, 256) 0 conv4_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_2_conv (Conv2D) (None, 32, 32, 256) 590080 conv4_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block2_2_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_2_relu (Activation (None, 32, 32, 256) 0 conv4_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_3_conv (Conv2D) (None, 32, 32, 1024) 263168 conv4_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block2_3_bn (BatchNormali (None, 32, 32, 1024) 4096 conv4_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_add (Add) (None, 32, 32, 1024) 0 conv4_block1_out[0][0]

conv4_block2_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_out (Activation) (None, 32, 32, 1024) 0 conv4_block2_add[0][0]

__________________________________________________________________________________________________

conv4_block3_1_conv (Conv2D) (None, 32, 32, 256) 262400 conv4_block2_out[0][0]

__________________________________________________________________________________________________

conv4_block3_1_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_1_relu (Activation (None, 32, 32, 256) 0 conv4_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_2_conv (Conv2D) (None, 32, 32, 256) 590080 conv4_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block3_2_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_2_relu (Activation (None, 32, 32, 256) 0 conv4_block3_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_3_conv (Conv2D) (None, 32, 32, 1024) 263168 conv4_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block3_3_bn (BatchNormali (None, 32, 32, 1024) 4096 conv4_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_add (Add) (None, 32, 32, 1024) 0 conv4_block2_out[0][0]

conv4_block3_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_out (Activation) (None, 32, 32, 1024) 0 conv4_block3_add[0][0]

__________________________________________________________________________________________________

conv4_block4_1_conv (Conv2D) (None, 32, 32, 256) 262400 conv4_block3_out[0][0]

__________________________________________________________________________________________________

conv4_block4_1_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_1_relu (Activation (None, 32, 32, 256) 0 conv4_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_2_conv (Conv2D) (None, 32, 32, 256) 590080 conv4_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block4_2_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_2_relu (Activation (None, 32, 32, 256) 0 conv4_block4_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_3_conv (Conv2D) (None, 32, 32, 1024) 263168 conv4_block4_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block4_3_bn (BatchNormali (None, 32, 32, 1024) 4096 conv4_block4_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_add (Add) (None, 32, 32, 1024) 0 conv4_block3_out[0][0]

conv4_block4_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_out (Activation) (None, 32, 32, 1024) 0 conv4_block4_add[0][0]

__________________________________________________________________________________________________

conv4_block5_1_conv (Conv2D) (None, 32, 32, 256) 262400 conv4_block4_out[0][0]

__________________________________________________________________________________________________

conv4_block5_1_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block5_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_1_relu (Activation (None, 32, 32, 256) 0 conv4_block5_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_2_conv (Conv2D) (None, 32, 32, 256) 590080 conv4_block5_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block5_2_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block5_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_2_relu (Activation (None, 32, 32, 256) 0 conv4_block5_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_3_conv (Conv2D) (None, 32, 32, 1024) 263168 conv4_block5_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block5_3_bn (BatchNormali (None, 32, 32, 1024) 4096 conv4_block5_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_add (Add) (None, 32, 32, 1024) 0 conv4_block4_out[0][0]

conv4_block5_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_out (Activation) (None, 32, 32, 1024) 0 conv4_block5_add[0][0]

__________________________________________________________________________________________________

conv4_block6_1_conv (Conv2D) (None, 32, 32, 256) 262400 conv4_block5_out[0][0]

__________________________________________________________________________________________________

conv4_block6_1_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block6_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_1_relu (Activation (None, 32, 32, 256) 0 conv4_block6_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block6_2_conv (Conv2D) (None, 32, 32, 256) 590080 conv4_block6_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block6_2_bn (BatchNormali (None, 32, 32, 256) 1024 conv4_block6_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_2_relu (Activation (None, 32, 32, 256) 0 conv4_block6_2_bn[0][0]

__________________________________________________________________________________________________

average_pooling2d (AveragePooli (None, 1, 1, 256) 0 conv4_block6_2_relu[0][0]

__________________________________________________________________________________________________

conv2d (Conv2D) (None, 1, 1, 256) 65792 average_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 1, 1, 256) 1024 conv2d[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 32, 32, 256) 65536 conv4_block6_2_relu[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 32, 32, 256) 589824 conv4_block6_2_relu[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 32, 32, 256) 589824 conv4_block6_2_relu[0][0]

__________________________________________________________________________________________________

conv2d_4 (Conv2D) (None, 32, 32, 256) 589824 conv4_block6_2_relu[0][0]

__________________________________________________________________________________________________

tf.nn.relu (TFOpLambda) (None, 1, 1, 256) 0 batch_normalization[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 32, 32, 256) 1024 conv2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 32, 32, 256) 1024 conv2d_2[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 32, 32, 256) 1024 conv2d_3[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 32, 32, 256) 1024 conv2d_4[0][0]

__________________________________________________________________________________________________

up_sampling2d (UpSampling2D) (None, 32, 32, 256) 0 tf.nn.relu[0][0]

__________________________________________________________________________________________________

tf.nn.relu_1 (TFOpLambda) (None, 32, 32, 256) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

tf.nn.relu_2 (TFOpLambda) (None, 32, 32, 256) 0 batch_normalization_2[0][0]

__________________________________________________________________________________________________

tf.nn.relu_3 (TFOpLambda) (None, 32, 32, 256) 0 batch_normalization_3[0][0]

__________________________________________________________________________________________________

tf.nn.relu_4 (TFOpLambda) (None, 32, 32, 256) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

concatenate (Concatenate) (None, 32, 32, 1280) 0 up_sampling2d[0][0]

tf.nn.relu_1[0][0]

tf.nn.relu_2[0][0]

tf.nn.relu_3[0][0]

tf.nn.relu_4[0][0]

__________________________________________________________________________________________________

conv2d_5 (Conv2D) (None, 32, 32, 256) 327680 concatenate[0][0]

__________________________________________________________________________________________________

batch_normalization_5 (BatchNor (None, 32, 32, 256) 1024 conv2d_5[0][0]

__________________________________________________________________________________________________

conv2d_6 (Conv2D) (None, 128, 128, 48) 3072 conv2_block3_2_relu[0][0]

__________________________________________________________________________________________________

tf.nn.relu_5 (TFOpLambda) (None, 32, 32, 256) 0 batch_normalization_5[0][0]

__________________________________________________________________________________________________

batch_normalization_6 (BatchNor (None, 128, 128, 48) 192 conv2d_6[0][0]

__________________________________________________________________________________________________

up_sampling2d_1 (UpSampling2D) (None, 128, 128, 256 0 tf.nn.relu_5[0][0]

__________________________________________________________________________________________________

tf.nn.relu_6 (TFOpLambda) (None, 128, 128, 48) 0 batch_normalization_6[0][0]

__________________________________________________________________________________________________

concatenate_1 (Concatenate) (None, 128, 128, 304 0 up_sampling2d_1[0][0]

tf.nn.relu_6[0][0]

__________________________________________________________________________________________________

conv2d_7 (Conv2D) (None, 128, 128, 256 700416 concatenate_1[0][0]

__________________________________________________________________________________________________

batch_normalization_7 (BatchNor (None, 128, 128, 256 1024 conv2d_7[0][0]

__________________________________________________________________________________________________

tf.nn.relu_7 (TFOpLambda) (None, 128, 128, 256 0 batch_normalization_7[0][0]

__________________________________________________________________________________________________

conv2d_8 (Conv2D) (None, 128, 128, 256 589824 tf.nn.relu_7[0][0]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 128, 128, 256 1024 conv2d_8[0][0]

__________________________________________________________________________________________________

tf.nn.relu_8 (TFOpLambda) (None, 128, 128, 256 0 batch_normalization_8[0][0]

__________________________________________________________________________________________________

up_sampling2d_2 (UpSampling2D) (None, 512, 512, 256 0 tf.nn.relu_8[0][0]

__________________________________________________________________________________________________

conv2d_9 (Conv2D) (None, 512, 512, 20) 5140 up_sampling2d_2[0][0]

==================================================================================================

Total params: 11,857,236

Trainable params: 11,824,500

Non-trainable params: 32,736

__________________________________________________________________________________________________

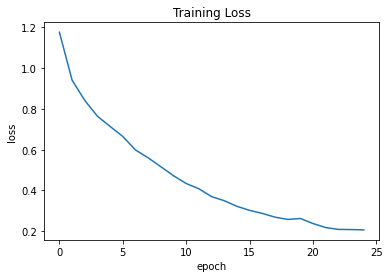

訓練

損失関数として sparse categorical crossentropy を、そして optimizer として Adam を使用してモデルを訓練します。

loss = keras.losses.SparseCategoricalCrossentropy(from_logits=True)

model.compile(

optimizer=keras.optimizers.Adam(learning_rate=0.001),

loss=loss,

metrics=["accuracy"],

)

history = model.fit(train_dataset, validation_data=val_dataset, epochs=25)

plt.plot(history.history["loss"])

plt.title("Training Loss")

plt.ylabel("loss")

plt.xlabel("epoch")

plt.show()

plt.plot(history.history["accuracy"])

plt.title("Training Accuracy")

plt.ylabel("accuracy")

plt.xlabel("epoch")

plt.show()

plt.plot(history.history["val_loss"])

plt.title("Validation Loss")

plt.ylabel("val_loss")

plt.xlabel("epoch")

plt.show()

plt.plot(history.history["val_accuracy"])

plt.title("Validation Accuracy")

plt.ylabel("val_accuracy")

plt.xlabel("epoch")

plt.show()

Epoch 1/25 250/250 [==============================] - 115s 359ms/step - loss: 1.1765 - accuracy: 0.6424 - val_loss: 2.3559 - val_accuracy: 0.5960 Epoch 2/25 250/250 [==============================] - 92s 366ms/step - loss: 0.9413 - accuracy: 0.6998 - val_loss: 1.7349 - val_accuracy: 0.5593 Epoch 3/25 250/250 [==============================] - 93s 371ms/step - loss: 0.8415 - accuracy: 0.7310 - val_loss: 1.3097 - val_accuracy: 0.6281 Epoch 4/25 250/250 [==============================] - 93s 372ms/step - loss: 0.7640 - accuracy: 0.7552 - val_loss: 1.0175 - val_accuracy: 0.6885 Epoch 5/25 250/250 [==============================] - 93s 372ms/step - loss: 0.7139 - accuracy: 0.7706 - val_loss: 1.2226 - val_accuracy: 0.6107 Epoch 6/25 250/250 [==============================] - 93s 373ms/step - loss: 0.6647 - accuracy: 0.7867 - val_loss: 0.8583 - val_accuracy: 0.7178 Epoch 7/25 250/250 [==============================] - 94s 375ms/step - loss: 0.5986 - accuracy: 0.8080 - val_loss: 0.9724 - val_accuracy: 0.7135 Epoch 8/25 250/250 [==============================] - 93s 372ms/step - loss: 0.5599 - accuracy: 0.8212 - val_loss: 0.9722 - val_accuracy: 0.7064 Epoch 9/25 250/250 [==============================] - 93s 372ms/step - loss: 0.5161 - accuracy: 0.8364 - val_loss: 0.9023 - val_accuracy: 0.7471 Epoch 10/25 250/250 [==============================] - 93s 373ms/step - loss: 0.4719 - accuracy: 0.8515 - val_loss: 0.8803 - val_accuracy: 0.7540 Epoch 11/25 250/250 [==============================] - 93s 372ms/step - loss: 0.4337 - accuracy: 0.8636 - val_loss: 0.9682 - val_accuracy: 0.7377 Epoch 12/25 250/250 [==============================] - 93s 373ms/step - loss: 0.4079 - accuracy: 0.8718 - val_loss: 0.9586 - val_accuracy: 0.7551 Epoch 13/25 250/250 [==============================] - 93s 373ms/step - loss: 0.3694 - accuracy: 0.8856 - val_loss: 0.9676 - val_accuracy: 0.7606 Epoch 14/25 250/250 [==============================] - 93s 373ms/step - loss: 0.3493 - accuracy: 0.8913 - val_loss: 0.8375 - val_accuracy: 0.7706 Epoch 15/25 250/250 [==============================] - 93s 373ms/step - loss: 0.3217 - accuracy: 0.9008 - val_loss: 0.9956 - val_accuracy: 0.7469 Epoch 16/25 250/250 [==============================] - 93s 372ms/step - loss: 0.3018 - accuracy: 0.9075 - val_loss: 0.9614 - val_accuracy: 0.7474 Epoch 17/25 250/250 [==============================] - 93s 372ms/step - loss: 0.2870 - accuracy: 0.9122 - val_loss: 0.9652 - val_accuracy: 0.7626 Epoch 18/25 250/250 [==============================] - 93s 373ms/step - loss: 0.2685 - accuracy: 0.9182 - val_loss: 0.8913 - val_accuracy: 0.7824 Epoch 19/25 250/250 [==============================] - 93s 373ms/step - loss: 0.2574 - accuracy: 0.9216 - val_loss: 1.0205 - val_accuracy: 0.7417 Epoch 20/25 250/250 [==============================] - 93s 372ms/step - loss: 0.2619 - accuracy: 0.9199 - val_loss: 0.9237 - val_accuracy: 0.7788 Epoch 21/25 250/250 [==============================] - 93s 372ms/step - loss: 0.2372 - accuracy: 0.9280 - val_loss: 0.9076 - val_accuracy: 0.7796 Epoch 22/25 250/250 [==============================] - 93s 372ms/step - loss: 0.2175 - accuracy: 0.9344 - val_loss: 0.9797 - val_accuracy: 0.7742 Epoch 23/25 250/250 [==============================] - 93s 372ms/step - loss: 0.2084 - accuracy: 0.9370 - val_loss: 0.9981 - val_accuracy: 0.7870 Epoch 24/25 250/250 [==============================] - 93s 373ms/step - loss: 0.2077 - accuracy: 0.9370 - val_loss: 1.0494 - val_accuracy: 0.7767 Epoch 25/25 250/250 [==============================] - 93s 372ms/step - loss: 0.2059 - accuracy: 0.9377 - val_loss: 0.9640 - val_accuracy: 0.7651

(訳注: 実験結果)

Epoch 1/25 250/250 [==============================] - 83s 236ms/step - loss: 1.1675 - accuracy: 0.6461 - val_loss: 3.1509 - val_accuracy: 0.5960 Epoch 2/25 250/250 [==============================] - 58s 232ms/step - loss: 0.9224 - accuracy: 0.7056 - val_loss: 1.7183 - val_accuracy: 0.5974 Epoch 3/25 250/250 [==============================] - 58s 230ms/step - loss: 0.8221 - accuracy: 0.7365 - val_loss: 1.4245 - val_accuracy: 0.5512 Epoch 4/25 250/250 [==============================] - 58s 230ms/step - loss: 0.7707 - accuracy: 0.7517 - val_loss: 1.1019 - val_accuracy: 0.6718 Epoch 5/25 250/250 [==============================] - 57s 230ms/step - loss: 0.7134 - accuracy: 0.7696 - val_loss: 0.8411 - val_accuracy: 0.7253 Epoch 6/25 250/250 [==============================] - 58s 231ms/step - loss: 0.6389 - accuracy: 0.7940 - val_loss: 0.9909 - val_accuracy: 0.6872 Epoch 7/25 250/250 [==============================] - 58s 231ms/step - loss: 0.5780 - accuracy: 0.8150 - val_loss: 0.9578 - val_accuracy: 0.7200 Epoch 8/25 250/250 [==============================] - 57s 230ms/step - loss: 0.5372 - accuracy: 0.8282 - val_loss: 1.1983 - val_accuracy: 0.6669 Epoch 9/25 250/250 [==============================] - 57s 230ms/step - loss: 0.5333 - accuracy: 0.8302 - val_loss: 0.8651 - val_accuracy: 0.7503 Epoch 10/25 250/250 [==============================] - 58s 231ms/step - loss: 0.4598 - accuracy: 0.8536 - val_loss: 0.8982 - val_accuracy: 0.7372 Epoch 11/25 250/250 [==============================] - 58s 231ms/step - loss: 0.4192 - accuracy: 0.8689 - val_loss: 0.8964 - val_accuracy: 0.7387 Epoch 12/25 250/250 [==============================] - 58s 232ms/step - loss: 0.3943 - accuracy: 0.8759 - val_loss: 0.8493 - val_accuracy: 0.7572 Epoch 13/25 250/250 [==============================] - 58s 231ms/step - loss: 0.3625 - accuracy: 0.8874 - val_loss: 0.9596 - val_accuracy: 0.7368 Epoch 14/25 250/250 [==============================] - 58s 231ms/step - loss: 0.3370 - accuracy: 0.8960 - val_loss: 1.0651 - val_accuracy: 0.7201 Epoch 15/25 250/250 [==============================] - 58s 231ms/step - loss: 0.3181 - accuracy: 0.9020 - val_loss: 0.9275 - val_accuracy: 0.7573 Epoch 16/25 250/250 [==============================] - 57s 229ms/step - loss: 0.3055 - accuracy: 0.9062 - val_loss: 0.9699 - val_accuracy: 0.7554 Epoch 17/25 250/250 [==============================] - 58s 231ms/step - loss: 0.2712 - accuracy: 0.9175 - val_loss: 0.9368 - val_accuracy: 0.7779 Epoch 18/25 250/250 [==============================] - 57s 230ms/step - loss: 0.2560 - accuracy: 0.9222 - val_loss: 0.9876 - val_accuracy: 0.7645 Epoch 19/25 250/250 [==============================] - 58s 230ms/step - loss: 0.2524 - accuracy: 0.9232 - val_loss: 0.9163 - val_accuracy: 0.7710 Epoch 20/25 250/250 [==============================] - 57s 230ms/step - loss: 0.2370 - accuracy: 0.9281 - val_loss: 0.9446 - val_accuracy: 0.7581 Epoch 21/25 250/250 [==============================] - 58s 231ms/step - loss: 0.2256 - accuracy: 0.9316 - val_loss: 0.8974 - val_accuracy: 0.7800 Epoch 22/25 250/250 [==============================] - 58s 232ms/step - loss: 0.2302 - accuracy: 0.9301 - val_loss: 0.9231 - val_accuracy: 0.7833 Epoch 23/25 250/250 [==============================] - 58s 232ms/step - loss: 0.2371 - accuracy: 0.9275 - val_loss: 0.9371 - val_accuracy: 0.7850 Epoch 24/25 250/250 [==============================] - 57s 229ms/step - loss: 0.2157 - accuracy: 0.9348 - val_loss: 1.0048 - val_accuracy: 0.7771 Epoch 25/25 250/250 [==============================] - 58s 232ms/step - loss: 0.2016 - accuracy: 0.9390 - val_loss: 0.9309 - val_accuracy: 0.7849

カラーマップ・オーバーレイを使用した推論

モデルからの raw 予測は shape (N, 512, 512, 20) の one-hot エンコードされたテンソルを表し、ここで 20 チャネルの各々は予測されたラベルに対応する二値マスクです。結果を可視化するため、それらを RGB セグメンテーション・マスクとしてプロットします、そこでは各ピクセルは予測された特定のラベルに対応する一意な色で表されます。データセットの一部として提供されている、human_colormap.mat ファイルから各ラベルに対応する色を簡単に見つけることができます。入力画像上で RGB セグメンテーション・マスクのオーバーレイをプロットすることもできます、画像にある異なるカテゴリーをより直感的に識別するのにこれは更に役立つからです。

# Loading the Colormap

colormap = loadmat(

"./instance-level_human_parsing/instance-level_human_parsing/human_colormap.mat"

)["colormap"]

colormap = colormap * 100

colormap = colormap.astype(np.uint8)

def infer(model, image_tensor):

predictions = model.predict(np.expand_dims((image_tensor), axis=0))

predictions = np.squeeze(predictions)

predictions = np.argmax(predictions, axis=2)

return predictions

def decode_segmentation_masks(mask, colormap, n_classes):

r = np.zeros_like(mask).astype(np.uint8)

g = np.zeros_like(mask).astype(np.uint8)

b = np.zeros_like(mask).astype(np.uint8)

for l in range(0, n_classes):

idx = mask == l

r[idx] = colormap[l, 0]

g[idx] = colormap[l, 1]

b[idx] = colormap[l, 2]

rgb = np.stack([r, g, b], axis=2)

return rgb

def get_overlay(image, colored_mask):

image = tf.keras.preprocessing.image.array_to_img(image)

image = np.array(image).astype(np.uint8)

overlay = cv2.addWeighted(image, 0.35, colored_mask, 0.65, 0)

return overlay

def plot_samples_matplotlib(display_list, figsize=(5, 3)):

_, axes = plt.subplots(nrows=1, ncols=len(display_list), figsize=figsize)

for i in range(len(display_list)):

if display_list[i].shape[-1] == 3:

axes[i].imshow(tf.keras.preprocessing.image.array_to_img(display_list[i]))

else:

axes[i].imshow(display_list[i])

plt.show()

def plot_predictions(images_list, colormap, model):

for image_file in images_list:

image_tensor = read_image(image_file)

prediction_mask = infer(image_tensor=image_tensor, model=model)

prediction_colormap = decode_segmentation_masks(prediction_mask, colormap, 20)

overlay = get_overlay(image_tensor, prediction_colormap)

plot_samples_matplotlib(

[image_tensor, overlay, prediction_colormap], figsize=(18, 14)

)

訓練画像上の推論

plot_predictions(train_images[:4], colormap, model=model)

検証画像上の推論

plot_predictions(val_images[:4], colormap, model=model)

以上