Keras 2 : examples : MIRNet による低照度画像強化 (翻訳/解説)

翻訳 : (株)クラスキャット セールスインフォメーション

作成日時 : 11/29/2021 (keras 2.7.0)

* 本ページは、Keras の以下のドキュメントを翻訳した上で適宜、補足説明したものです:

- Code examples : Computer Vision : Low-light image enhancement using MIRNet (Author: Soumik Rakshit)

* サンプルコードの動作確認はしておりますが、必要な場合には適宜、追加改変しています。

* ご自由にリンクを張って頂いてかまいませんが、sales-info@classcat.com までご一報いただけると嬉しいです。

- 人工知能研究開発支援

- 人工知能研修サービス(経営者層向けオンサイト研修)

- テクニカルコンサルティングサービス

- 実証実験(プロトタイプ構築)

- アプリケーションへの実装

- 人工知能研修サービス

- PoC(概念実証)を失敗させないための支援

- テレワーク & オンライン授業を支援

- お住まいの地域に関係なく Web ブラウザからご参加頂けます。事前登録 が必要ですのでご注意ください。

- ウェビナー運用には弊社製品「ClassCat® Webinar」を利用しています。

◆ お問合せ : 本件に関するお問い合わせ先は下記までお願いいたします。

- 株式会社クラスキャット セールス・マーケティング本部 セールス・インフォメーション

- E-Mail:sales-info@classcat.com ; WebSite: www.classcat.com ; Facebook

Keras 2 : examples : MIRNet による低照度画像強化

Description: 低照度画像強化のための MIRNet アーキテクチャを実装する。

イントロダクション

高品質画像コンテンツをその劣化したバージョンからリカバーする目的で、画像復元 (= restoration) は写真撮影、セキュリティ、医用画像処理、そしてリモートセンシングのような多くのアプリケーションを享受しています。このサンプルでは低照度画像強化のための MIRNet モデルを実装します、これは高解像度の空間的詳細を同時に保持しながら、マルチスケールからコンテキスト情報を組み合わせた豊かな特徴のセットを学習する完全畳み込みアーキテクチャです。

リファレンス :

- Learning Enriched Features for Real Image Restoration and Enhancement

- The Retinex Theory of Color Vision

- Two deterministic half-quadratic regularization algorithms for computed imaging

LOLDataset のダウンロード

LoL Dataset は低照度画像強化のために作成されました。それは訓練用に 485 画像そしてテスト用に 15 画像を提供しています。データセットの各画像ペアは低照度入力画像と対応する適正露出のリファレンス画像からなります。

import os

import cv2

import random

import numpy as np

from glob import glob

from PIL import Image, ImageOps

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

!gdown https://drive.google.com/uc?id=1DdGIJ4PZPlF2ikl8mNM9V-PdVxVLbQi6

!unzip -q lol_dataset.zip

Downloading... From: https://drive.google.com/uc?id=1DdGIJ4PZPlF2ikl8mNM9V-PdVxVLbQi6 To: /content/keras-io/scripts/tmp_2614641/lol_dataset.zip 347MB [00:03, 108MB/s]

TensorFlow Dataset を作成する

LoL Dataset の訓練セットから訓練用に 300 画像ペアを使用して、検証用に残りの 185 画像ペアを使用します。訓練と検証用に使用される画像ペアからサイズ 128 x 128 のランダムクロップを生成します。

random.seed(10)

IMAGE_SIZE = 128

BATCH_SIZE = 4

MAX_TRAIN_IMAGES = 300

def read_image(image_path):

image = tf.io.read_file(image_path)

image = tf.image.decode_png(image, channels=3)

image.set_shape([None, None, 3])

image = tf.cast(image, dtype=tf.float32) / 255.0

return image

def random_crop(low_image, enhanced_image):

low_image_shape = tf.shape(low_image)[:2]

low_w = tf.random.uniform(

shape=(), maxval=low_image_shape[1] - IMAGE_SIZE + 1, dtype=tf.int32

)

low_h = tf.random.uniform(

shape=(), maxval=low_image_shape[0] - IMAGE_SIZE + 1, dtype=tf.int32

)

enhanced_w = low_w

enhanced_h = low_h

low_image_cropped = low_image[

low_h : low_h + IMAGE_SIZE, low_w : low_w + IMAGE_SIZE

]

enhanced_image_cropped = enhanced_image[

enhanced_h : enhanced_h + IMAGE_SIZE, enhanced_w : enhanced_w + IMAGE_SIZE

]

return low_image_cropped, enhanced_image_cropped

def load_data(low_light_image_path, enhanced_image_path):

low_light_image = read_image(low_light_image_path)

enhanced_image = read_image(enhanced_image_path)

low_light_image, enhanced_image = random_crop(low_light_image, enhanced_image)

return low_light_image, enhanced_image

def get_dataset(low_light_images, enhanced_images):

dataset = tf.data.Dataset.from_tensor_slices((low_light_images, enhanced_images))

dataset = dataset.map(load_data, num_parallel_calls=tf.data.AUTOTUNE)

dataset = dataset.batch(BATCH_SIZE, drop_remainder=True)

return dataset

train_low_light_images = sorted(glob("./lol_dataset/our485/low/*"))[:MAX_TRAIN_IMAGES]

train_enhanced_images = sorted(glob("./lol_dataset/our485/high/*"))[:MAX_TRAIN_IMAGES]

val_low_light_images = sorted(glob("./lol_dataset/our485/low/*"))[MAX_TRAIN_IMAGES:]

val_enhanced_images = sorted(glob("./lol_dataset/our485/high/*"))[MAX_TRAIN_IMAGES:]

test_low_light_images = sorted(glob("./lol_dataset/eval15/low/*"))

test_enhanced_images = sorted(glob("./lol_dataset/eval15/high/*"))

train_dataset = get_dataset(train_low_light_images, train_enhanced_images)

val_dataset = get_dataset(val_low_light_images, val_enhanced_images)

print("Train Dataset:", train_dataset)

print("Val Dataset:", val_dataset)

Train Dataset: <BatchDataset shapes: ((4, None, None, 3), (4, None, None, 3)), types: (tf.float32, tf.float32)> Val Dataset: <BatchDataset shapes: ((4, None, None, 3), (4, None, None, 3)), types: (tf.float32, tf.float32)>

MIRNet モデル

ここに MIRNet モデルの主要な特徴があります :

- 正確な空間的詳細を保持するために元の高解像度の特徴を維持しながら、複数の空間的スケールに渡り特徴の補完的なセットを計算する特徴抽出モデルです。

- 情報交換のために定期的に反復されるメカニズムで、そこではマルチ解像度の分岐に渡る特徴は改善された表現学習のために一緒に漸進的に融合されます。

- 可変な受容野を動的に結合して各空間的解像度で元の特徴情報を忠実に保持する、選択的な (= selective) カーネルネットワークを使用したマルチスケールな特徴を融合する新しいアプローチ。

- 再帰的残差デザイン、これは学習プロセス全体を単純化するために入力信号を漸進的に分解し、そして非常に深いネットワークの構築を可能にします。

選択的カーネル特徴融合

選択的カーネル特徴融合 or SKFF (Selective Kernel Feature Fusion) モジュールは 2 つの演算: 融合 (Fuse) と 選択 (Select) を通して受容野動的な調整を実行します。Fuse (融合) 演算子はマルチ解像度ストリームからの情報を結合してグローバル特徴記述子を生成します。Select 演算子はこれらの記述子を使用して (異なるストリームの) 特徴マップを再調整 (= recalibrate) し、集約が続きます。

Fuse: SKFF は、異なるスケールの情報を運ぶ 3 つの並列畳み込みストリームから入力を受け取ります。最初に要素 wise の和を使用してこれらのマルチスケール特徴を結合して、その上で空間的次元に渡りグローバル平均プーリング (GAP) を適用します。次に、簡潔な (= compact) 特徴表現を生成するために channel- downscaling 畳み込み層を適用します、これは 3 つの並列 channel-upscaling 畳み込み層 (各解像度ストリームに対して 1 つ) を通過して 3 つの特徴記述子を提供します。

Select : この演算子は対応する活性を得るために特徴記述子に softmax 関数を適用します、これはマルチスケール特徴マップを適応的に再補正するために使用されます。集約された特徴は、対応するマルチスケール特徴と特徴記述子の積の和として定義されます。

def selective_kernel_feature_fusion(

multi_scale_feature_1, multi_scale_feature_2, multi_scale_feature_3

):

channels = list(multi_scale_feature_1.shape)[-1]

combined_feature = layers.Add()(

[multi_scale_feature_1, multi_scale_feature_2, multi_scale_feature_3]

)

gap = layers.GlobalAveragePooling2D()(combined_feature)

channel_wise_statistics = tf.reshape(gap, shape=(-1, 1, 1, channels))

compact_feature_representation = layers.Conv2D(

filters=channels // 8, kernel_size=(1, 1), activation="relu"

)(channel_wise_statistics)

feature_descriptor_1 = layers.Conv2D(

channels, kernel_size=(1, 1), activation="softmax"

)(compact_feature_representation)

feature_descriptor_2 = layers.Conv2D(

channels, kernel_size=(1, 1), activation="softmax"

)(compact_feature_representation)

feature_descriptor_3 = layers.Conv2D(

channels, kernel_size=(1, 1), activation="softmax"

)(compact_feature_representation)

feature_1 = multi_scale_feature_1 * feature_descriptor_1

feature_2 = multi_scale_feature_2 * feature_descriptor_2

feature_3 = multi_scale_feature_3 * feature_descriptor_3

aggregated_feature = layers.Add()([feature_1, feature_2, feature_3])

return aggregated_feature

デュアル・アテンション・ユニット

デュアル・アテンション・ユニット (二重注意ユニット) or DAU (Dual Attention Unit) は畳み込みストリーム内の特徴を抽出するために使用されます。SKFF ブロックがマルチ解像度分岐に渡り情報を融合する一方で、空間とチャネル次元の両方に沿った特徴テンソル内で情報を共有するメカニズムも必要です、これは DAU ブロックにより成されます。DAU はあまり有用でない特徴を抑制してより情報を持つものだけを更に通過させます。この特徴の再補正はチャネル注意 (= Channel Attention) と空間的注意 (= Spatial Attention) メカニズムを使用して実現されます。

Channel Attention 分岐は squeeze と excitation 演算子を適用して畳み込み特徴マップのチャネル間の関係を活用します。特徴マップが与えられたとき、squeeze 演算はグローバルコンテキストをエンコードするために空間次元に渡りグローバル平均プーリングを適用して、特徴記述子を生成します。excitation 演算子はこの特徴記述子を sigmoid ゲートが続く 2 つの畳み込み層を通して活性を生成します。最後に、Channel Attention 分岐の出力は、入力特徴マップを出力活性でリスケールして得られます。

Spatial Attention 分岐は畳み込み特徴の空間内の依存性を利用するために設計されています。Spatial Attention の目標は空間的注意マップを生成して、入力される (= incoming) 特徴を再補正するためにそれを使用することです。spatial attention マップを生成するため、Spatial Attention 分岐は最初に入力特徴にグローバル平均プーリングと Max 平均プーリングをチャネル次元に沿って独立的に適用して、結果としての特徴マップを形成するために出力を連結します、これは次に spatial attention マップを取得するために畳み込みと sigmoid 活性化を通されます。そしてこの spatial attention は入力特徴マップをリスケールするために使用されます。

def spatial_attention_block(input_tensor):

average_pooling = tf.reduce_max(input_tensor, axis=-1)

average_pooling = tf.expand_dims(average_pooling, axis=-1)

max_pooling = tf.reduce_mean(input_tensor, axis=-1)

max_pooling = tf.expand_dims(max_pooling, axis=-1)

concatenated = layers.Concatenate(axis=-1)([average_pooling, max_pooling])

feature_map = layers.Conv2D(1, kernel_size=(1, 1))(concatenated)

feature_map = tf.nn.sigmoid(feature_map)

return input_tensor * feature_map

def channel_attention_block(input_tensor):

channels = list(input_tensor.shape)[-1]

average_pooling = layers.GlobalAveragePooling2D()(input_tensor)

feature_descriptor = tf.reshape(average_pooling, shape=(-1, 1, 1, channels))

feature_activations = layers.Conv2D(

filters=channels // 8, kernel_size=(1, 1), activation="relu"

)(feature_descriptor)

feature_activations = layers.Conv2D(

filters=channels, kernel_size=(1, 1), activation="sigmoid"

)(feature_activations)

return input_tensor * feature_activations

def dual_attention_unit_block(input_tensor):

channels = list(input_tensor.shape)[-1]

feature_map = layers.Conv2D(

channels, kernel_size=(3, 3), padding="same", activation="relu"

)(input_tensor)

feature_map = layers.Conv2D(channels, kernel_size=(3, 3), padding="same")(

feature_map

)

channel_attention = channel_attention_block(feature_map)

spatial_attention = spatial_attention_block(feature_map)

concatenation = layers.Concatenate(axis=-1)([channel_attention, spatial_attention])

concatenation = layers.Conv2D(channels, kernel_size=(1, 1))(concatenation)

return layers.Add()([input_tensor, concatenation])

マルチスケール残差ブロック

マルチスケール残差ブロックは、低解像度から豊富なコンテキスト情報を受け取りながら、高解像度表現を保持することにより空間的に正確な出力を生成することができます。MRB は並列に接続された複数の (この論文では 3 つの) 完全畳み込みストリームから構成されます。それは高解像度特徴を低解像度特徴の手助けで (そしてその逆でも) 統合整理するために並列ストリームに渡り情報交換することを可能にします。MIRNet は学習プロセスの間情報の流れをスムースにするために再帰的な (スキップ接続を持つ) 残差デザインを用いています。アーキテクチャの残差的な性質を維持するために、マルチスケール残差ブロックで使用されるダウンサンプリングとアップサンプリング演算を実行するのに残差リサイズ・モジュールが使用されます。

# Recursive Residual Modules

def down_sampling_module(input_tensor):

channels = list(input_tensor.shape)[-1]

main_branch = layers.Conv2D(channels, kernel_size=(1, 1), activation="relu")(

input_tensor

)

main_branch = layers.Conv2D(

channels, kernel_size=(3, 3), padding="same", activation="relu"

)(main_branch)

main_branch = layers.MaxPooling2D()(main_branch)

main_branch = layers.Conv2D(channels * 2, kernel_size=(1, 1))(main_branch)

skip_branch = layers.MaxPooling2D()(input_tensor)

skip_branch = layers.Conv2D(channels * 2, kernel_size=(1, 1))(skip_branch)

return layers.Add()([skip_branch, main_branch])

def up_sampling_module(input_tensor):

channels = list(input_tensor.shape)[-1]

main_branch = layers.Conv2D(channels, kernel_size=(1, 1), activation="relu")(

input_tensor

)

main_branch = layers.Conv2D(

channels, kernel_size=(3, 3), padding="same", activation="relu"

)(main_branch)

main_branch = layers.UpSampling2D()(main_branch)

main_branch = layers.Conv2D(channels // 2, kernel_size=(1, 1))(main_branch)

skip_branch = layers.UpSampling2D()(input_tensor)

skip_branch = layers.Conv2D(channels // 2, kernel_size=(1, 1))(skip_branch)

return layers.Add()([skip_branch, main_branch])

# MRB Block

def multi_scale_residual_block(input_tensor, channels):

# features

level1 = input_tensor

level2 = down_sampling_module(input_tensor)

level3 = down_sampling_module(level2)

# DAU

level1_dau = dual_attention_unit_block(level1)

level2_dau = dual_attention_unit_block(level2)

level3_dau = dual_attention_unit_block(level3)

# SKFF

level1_skff = selective_kernel_feature_fusion(

level1_dau,

up_sampling_module(level2_dau),

up_sampling_module(up_sampling_module(level3_dau)),

)

level2_skff = selective_kernel_feature_fusion(

down_sampling_module(level1_dau), level2_dau, up_sampling_module(level3_dau)

)

level3_skff = selective_kernel_feature_fusion(

down_sampling_module(down_sampling_module(level1_dau)),

down_sampling_module(level2_dau),

level3_dau,

)

# DAU 2

level1_dau_2 = dual_attention_unit_block(level1_skff)

level2_dau_2 = up_sampling_module((dual_attention_unit_block(level2_skff)))

level3_dau_2 = up_sampling_module(

up_sampling_module(dual_attention_unit_block(level3_skff))

)

# SKFF 2

skff_ = selective_kernel_feature_fusion(level1_dau_2, level3_dau_2, level3_dau_2)

conv = layers.Conv2D(channels, kernel_size=(3, 3), padding="same")(skff_)

return layers.Add()([input_tensor, conv])

MIRNet モデル

def recursive_residual_group(input_tensor, num_mrb, channels):

conv1 = layers.Conv2D(channels, kernel_size=(3, 3), padding="same")(input_tensor)

for _ in range(num_mrb):

conv1 = multi_scale_residual_block(conv1, channels)

conv2 = layers.Conv2D(channels, kernel_size=(3, 3), padding="same")(conv1)

return layers.Add()([conv2, input_tensor])

def mirnet_model(num_rrg, num_mrb, channels):

input_tensor = keras.Input(shape=[None, None, 3])

x1 = layers.Conv2D(channels, kernel_size=(3, 3), padding="same")(input_tensor)

for _ in range(num_rrg):

x1 = recursive_residual_group(x1, num_mrb, channels)

conv = layers.Conv2D(3, kernel_size=(3, 3), padding="same")(x1)

output_tensor = layers.Add()([input_tensor, conv])

return keras.Model(input_tensor, output_tensor)

model = mirnet_model(num_rrg=3, num_mrb=2, channels=64)

訓練

- 損失関数として Charbonnier 損失 を、そして 1e-4 の学習率を使用して Adam Optimizer を使用して MIRNet を訓練します。

- メトリックとしては Peak Signal Noise Ratio (ピーク信号 (対) 雑音比) or PSNR を使用します、これは信号の最大可能値 (パワー) とその表現の品質に影響を与える歪んだノイズのパワーの間の比率のための式です。

def charbonnier_loss(y_true, y_pred):

return tf.reduce_mean(tf.sqrt(tf.square(y_true - y_pred) + tf.square(1e-3)))

def peak_signal_noise_ratio(y_true, y_pred):

return tf.image.psnr(y_pred, y_true, max_val=255.0)

optimizer = keras.optimizers.Adam(learning_rate=1e-4)

model.compile(

optimizer=optimizer, loss=charbonnier_loss, metrics=[peak_signal_noise_ratio]

)

history = model.fit(

train_dataset,

validation_data=val_dataset,

epochs=50,

callbacks=[

keras.callbacks.ReduceLROnPlateau(

monitor="val_peak_signal_noise_ratio",

factor=0.5,

patience=5,

verbose=1,

min_delta=1e-7,

mode="max",

)

],

)

plt.plot(history.history["loss"], label="train_loss")

plt.plot(history.history["val_loss"], label="val_loss")

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.title("Train and Validation Losses Over Epochs", fontsize=14)

plt.legend()

plt.grid()

plt.show()

plt.plot(history.history["peak_signal_noise_ratio"], label="train_psnr")

plt.plot(history.history["val_peak_signal_noise_ratio"], label="val_psnr")

plt.xlabel("Epochs")

plt.ylabel("PSNR")

plt.title("Train and Validation PSNR Over Epochs", fontsize=14)

plt.legend()

plt.grid()

plt.show()

Epoch 1/50 75/75 [==============================] - 109s 731ms/step - loss: 0.2125 - peak_signal_noise_ratio: 62.0458 - val_loss: 0.1592 - val_peak_signal_noise_ratio: 64.1833 Epoch 2/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1764 - peak_signal_noise_ratio: 63.1356 - val_loss: 0.1257 - val_peak_signal_noise_ratio: 65.6498 Epoch 3/50 75/75 [==============================] - 49s 652ms/step - loss: 0.1724 - peak_signal_noise_ratio: 63.3172 - val_loss: 0.1245 - val_peak_signal_noise_ratio: 65.6902 Epoch 4/50 75/75 [==============================] - 49s 653ms/step - loss: 0.1670 - peak_signal_noise_ratio: 63.4917 - val_loss: 0.1206 - val_peak_signal_noise_ratio: 65.8893 Epoch 5/50 75/75 [==============================] - 49s 653ms/step - loss: 0.1651 - peak_signal_noise_ratio: 63.6555 - val_loss: 0.1333 - val_peak_signal_noise_ratio: 65.6338 Epoch 6/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1572 - peak_signal_noise_ratio: 64.1984 - val_loss: 0.1142 - val_peak_signal_noise_ratio: 66.7711 Epoch 7/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1592 - peak_signal_noise_ratio: 64.0062 - val_loss: 0.1205 - val_peak_signal_noise_ratio: 66.1075 Epoch 8/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1493 - peak_signal_noise_ratio: 64.4675 - val_loss: 0.1170 - val_peak_signal_noise_ratio: 66.1355 Epoch 9/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1446 - peak_signal_noise_ratio: 64.7416 - val_loss: 0.1301 - val_peak_signal_noise_ratio: 66.0207 Epoch 10/50 75/75 [==============================] - 49s 655ms/step - loss: 0.1539 - peak_signal_noise_ratio: 64.3999 - val_loss: 0.1220 - val_peak_signal_noise_ratio: 66.7203 Epoch 11/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1451 - peak_signal_noise_ratio: 64.7352 - val_loss: 0.1219 - val_peak_signal_noise_ratio: 66.3140 Epoch 00011: ReduceLROnPlateau reducing learning rate to 4.999999873689376e-05. Epoch 12/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1492 - peak_signal_noise_ratio: 64.7238 - val_loss: 0.1204 - val_peak_signal_noise_ratio: 66.4726 Epoch 13/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1456 - peak_signal_noise_ratio: 64.9666 - val_loss: 0.1109 - val_peak_signal_noise_ratio: 67.1270 Epoch 14/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1372 - peak_signal_noise_ratio: 65.3932 - val_loss: 0.1150 - val_peak_signal_noise_ratio: 66.9255 Epoch 15/50 75/75 [==============================] - 49s 650ms/step - loss: 0.1340 - peak_signal_noise_ratio: 65.5611 - val_loss: 0.1111 - val_peak_signal_noise_ratio: 67.2009 Epoch 16/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1377 - peak_signal_noise_ratio: 65.3355 - val_loss: 0.1140 - val_peak_signal_noise_ratio: 67.0495 Epoch 17/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1340 - peak_signal_noise_ratio: 65.6484 - val_loss: 0.1132 - val_peak_signal_noise_ratio: 67.0257 Epoch 18/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1360 - peak_signal_noise_ratio: 65.4871 - val_loss: 0.1070 - val_peak_signal_noise_ratio: 67.4185 Epoch 19/50 75/75 [==============================] - 49s 649ms/step - loss: 0.1349 - peak_signal_noise_ratio: 65.4856 - val_loss: 0.1112 - val_peak_signal_noise_ratio: 67.2248 Epoch 20/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1273 - peak_signal_noise_ratio: 66.0817 - val_loss: 0.1185 - val_peak_signal_noise_ratio: 67.0208 Epoch 21/50 75/75 [==============================] - 49s 656ms/step - loss: 0.1393 - peak_signal_noise_ratio: 65.3710 - val_loss: 0.1102 - val_peak_signal_noise_ratio: 67.0362 Epoch 22/50 75/75 [==============================] - 49s 653ms/step - loss: 0.1326 - peak_signal_noise_ratio: 65.8781 - val_loss: 0.1059 - val_peak_signal_noise_ratio: 67.4949 Epoch 23/50 75/75 [==============================] - 49s 653ms/step - loss: 0.1260 - peak_signal_noise_ratio: 66.1770 - val_loss: 0.1187 - val_peak_signal_noise_ratio: 66.6312 Epoch 24/50 75/75 [==============================] - 49s 650ms/step - loss: 0.1331 - peak_signal_noise_ratio: 65.8160 - val_loss: 0.1075 - val_peak_signal_noise_ratio: 67.2668 Epoch 25/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1288 - peak_signal_noise_ratio: 66.0734 - val_loss: 0.1027 - val_peak_signal_noise_ratio: 67.9508 Epoch 26/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1306 - peak_signal_noise_ratio: 66.0349 - val_loss: 0.1076 - val_peak_signal_noise_ratio: 67.3821 Epoch 27/50 75/75 [==============================] - 49s 655ms/step - loss: 0.1356 - peak_signal_noise_ratio: 65.7978 - val_loss: 0.1079 - val_peak_signal_noise_ratio: 67.4785 Epoch 28/50 75/75 [==============================] - 49s 655ms/step - loss: 0.1270 - peak_signal_noise_ratio: 66.2681 - val_loss: 0.1116 - val_peak_signal_noise_ratio: 67.3327 Epoch 29/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1297 - peak_signal_noise_ratio: 66.0506 - val_loss: 0.1057 - val_peak_signal_noise_ratio: 67.5432 Epoch 30/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1275 - peak_signal_noise_ratio: 66.3542 - val_loss: 0.1034 - val_peak_signal_noise_ratio: 67.4624 Epoch 00030: ReduceLROnPlateau reducing learning rate to 2.499999936844688e-05. Epoch 31/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1258 - peak_signal_noise_ratio: 66.2724 - val_loss: 0.1066 - val_peak_signal_noise_ratio: 67.5729 Epoch 32/50 75/75 [==============================] - 49s 653ms/step - loss: 0.1153 - peak_signal_noise_ratio: 67.0384 - val_loss: 0.1064 - val_peak_signal_noise_ratio: 67.4336 Epoch 33/50 75/75 [==============================] - 49s 653ms/step - loss: 0.1189 - peak_signal_noise_ratio: 66.7662 - val_loss: 0.1062 - val_peak_signal_noise_ratio: 67.5128 Epoch 34/50 75/75 [==============================] - 49s 654ms/step - loss: 0.1159 - peak_signal_noise_ratio: 66.9257 - val_loss: 0.1003 - val_peak_signal_noise_ratio: 67.8672 Epoch 35/50 75/75 [==============================] - 49s 653ms/step - loss: 0.1191 - peak_signal_noise_ratio: 66.7690 - val_loss: 0.1043 - val_peak_signal_noise_ratio: 67.4840 Epoch 00035: ReduceLROnPlateau reducing learning rate to 1.249999968422344e-05. Epoch 36/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1158 - peak_signal_noise_ratio: 67.0264 - val_loss: 0.1057 - val_peak_signal_noise_ratio: 67.6526 Epoch 37/50 75/75 [==============================] - 49s 652ms/step - loss: 0.1128 - peak_signal_noise_ratio: 67.1950 - val_loss: 0.1104 - val_peak_signal_noise_ratio: 67.1770 Epoch 38/50 75/75 [==============================] - 49s 652ms/step - loss: 0.1200 - peak_signal_noise_ratio: 66.7623 - val_loss: 0.1048 - val_peak_signal_noise_ratio: 67.7003 Epoch 39/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1112 - peak_signal_noise_ratio: 67.3895 - val_loss: 0.1031 - val_peak_signal_noise_ratio: 67.6530 Epoch 40/50 75/75 [==============================] - 49s 650ms/step - loss: 0.1125 - peak_signal_noise_ratio: 67.1694 - val_loss: 0.1034 - val_peak_signal_noise_ratio: 67.6437 Epoch 00040: ReduceLROnPlateau reducing learning rate to 6.24999984211172e-06. Epoch 41/50 75/75 [==============================] - 49s 650ms/step - loss: 0.1131 - peak_signal_noise_ratio: 67.2471 - val_loss: 0.1152 - val_peak_signal_noise_ratio: 66.8625 Epoch 42/50 75/75 [==============================] - 49s 650ms/step - loss: 0.1069 - peak_signal_noise_ratio: 67.5794 - val_loss: 0.1119 - val_peak_signal_noise_ratio: 67.1944 Epoch 43/50 75/75 [==============================] - 49s 651ms/step - loss: 0.1118 - peak_signal_noise_ratio: 67.2779 - val_loss: 0.1147 - val_peak_signal_noise_ratio: 66.9731 Epoch 44/50 75/75 [==============================] - 48s 647ms/step - loss: 0.1101 - peak_signal_noise_ratio: 67.2777 - val_loss: 0.1107 - val_peak_signal_noise_ratio: 67.2580 Epoch 45/50 75/75 [==============================] - 49s 649ms/step - loss: 0.1076 - peak_signal_noise_ratio: 67.6359 - val_loss: 0.1103 - val_peak_signal_noise_ratio: 67.2720 Epoch 00045: ReduceLROnPlateau reducing learning rate to 3.12499992105586e-06. Epoch 46/50 75/75 [==============================] - 49s 648ms/step - loss: 0.1066 - peak_signal_noise_ratio: 67.4869 - val_loss: 0.1077 - val_peak_signal_noise_ratio: 67.4986 Epoch 47/50 75/75 [==============================] - 49s 649ms/step - loss: 0.1072 - peak_signal_noise_ratio: 67.4890 - val_loss: 0.1140 - val_peak_signal_noise_ratio: 67.1755 Epoch 48/50 75/75 [==============================] - 49s 649ms/step - loss: 0.1065 - peak_signal_noise_ratio: 67.6796 - val_loss: 0.1091 - val_peak_signal_noise_ratio: 67.3442 Epoch 49/50 75/75 [==============================] - 49s 648ms/step - loss: 0.1098 - peak_signal_noise_ratio: 67.3909 - val_loss: 0.1082 - val_peak_signal_noise_ratio: 67.4616 Epoch 50/50 75/75 [==============================] - 49s 648ms/step - loss: 0.1090 - peak_signal_noise_ratio: 67.5139 - val_loss: 0.1124 - val_peak_signal_noise_ratio: 67.1488 Epoch 00050: ReduceLROnPlateau reducing learning rate to 1.56249996052793e-06.

(訳者注: 実験結果)

Epoch 1/50 WARNING:tensorflow:AutoGraph could not transformand will run it as-is. Cause: Unable to locate the source code of . Note that functions defined in certain environments, like the interactive Python shell, do not expose their source code. If that is the case, you should define them in a .py source file. If you are certain the code is graph-compatible, wrap the call using @tf.autograph.experimental.do_not_convert. Original error: could not get source code To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert WARNING: AutoGraph could not transform and will run it as-is. Cause: Unable to locate the source code of . Note that functions defined in certain environments, like the interactive Python shell, do not expose their source code. If that is the case, you should define them in a .py source file. If you are certain the code is graph-compatible, wrap the call using @tf.autograph.experimental.do_not_convert. Original error: could not get source code To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert WARNING:tensorflow:AutoGraph could not transform and will run it as-is. Cause: Unable to locate the source code of . Note that functions defined in certain environments, like the interactive Python shell, do not expose their source code. If that is the case, you should define them in a .py source file. If you are certain the code is graph-compatible, wrap the call using @tf.autograph.experimental.do_not_convert. Original error: could not get source code To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert WARNING: AutoGraph could not transform and will run it as-is. Cause: Unable to locate the source code of . Note that functions defined in certain environments, like the interactive Python shell, do not expose their source code. If that is the case, you should define them in a .py source file. If you are certain the code is graph-compatible, wrap the call using @tf.autograph.experimental.do_not_convert. Original error: could not get source code To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert 75/75 [==============================] - 93s 612ms/step - loss: 0.1962 - peak_signal_noise_ratio: 62.4429 - val_loss: 0.1354 - val_peak_signal_noise_ratio: 65.4168 - lr: 1.0000e-04 Epoch 2/50 75/75 [==============================] - 41s 541ms/step - loss: 0.1767 - peak_signal_noise_ratio: 63.2681 - val_loss: 0.1215 - val_peak_signal_noise_ratio: 65.9509 - lr: 1.0000e-04 Epoch 3/50 75/75 [==============================] - 35s 470ms/step - loss: 0.1684 - peak_signal_noise_ratio: 63.6533 - val_loss: 0.1188 - val_peak_signal_noise_ratio: 66.2894 - lr: 1.0000e-04 Epoch 4/50 75/75 [==============================] - 41s 542ms/step - loss: 0.1710 - peak_signal_noise_ratio: 63.5991 - val_loss: 0.1176 - val_peak_signal_noise_ratio: 66.1768 - lr: 1.0000e-04 Epoch 5/50 75/75 [==============================] - 40s 538ms/step - loss: 0.1628 - peak_signal_noise_ratio: 63.8911 - val_loss: 0.1219 - val_peak_signal_noise_ratio: 65.8437 - lr: 1.0000e-04 Epoch 6/50 75/75 [==============================] - 36s 476ms/step - loss: 0.1603 - peak_signal_noise_ratio: 63.9455 - val_loss: 0.1204 - val_peak_signal_noise_ratio: 66.1169 - lr: 1.0000e-04 Epoch 7/50 75/75 [==============================] - 40s 540ms/step - loss: 0.1621 - peak_signal_noise_ratio: 63.9489 - val_loss: 0.1186 - val_peak_signal_noise_ratio: 66.1350 - lr: 1.0000e-04 Epoch 8/50 75/75 [==============================] - ETA: 0s - loss: 0.1599 - peak_signal_noise_ratio: 63.9998 Epoch 00008: ReduceLROnPlateau reducing learning rate to 4.999999873689376e-05. 75/75 [==============================] - 36s 474ms/step - loss: 0.1599 - peak_signal_noise_ratio: 63.9998 - val_loss: 0.1211 - val_peak_signal_noise_ratio: 65.9684 - lr: 1.0000e-04 Epoch 9/50 75/75 [==============================] - 41s 541ms/step - loss: 0.1523 - peak_signal_noise_ratio: 64.3761 - val_loss: 0.1111 - val_peak_signal_noise_ratio: 66.8115 - lr: 5.0000e-05 Epoch 10/50 75/75 [==============================] - 35s 472ms/step - loss: 0.1439 - peak_signal_noise_ratio: 64.7895 - val_loss: 0.1135 - val_peak_signal_noise_ratio: 66.6322 - lr: 5.0000e-05 Epoch 11/50 75/75 [==============================] - 35s 473ms/step - loss: 0.1436 - peak_signal_noise_ratio: 64.9855 - val_loss: 0.1151 - val_peak_signal_noise_ratio: 66.7188 - lr: 5.0000e-05 Epoch 12/50 75/75 [==============================] - 41s 542ms/step - loss: 0.1399 - peak_signal_noise_ratio: 65.0474 - val_loss: 0.1165 - val_peak_signal_noise_ratio: 66.7874 - lr: 5.0000e-05 Epoch 13/50 75/75 [==============================] - 40s 539ms/step - loss: 0.1429 - peak_signal_noise_ratio: 64.9748 - val_loss: 0.1118 - val_peak_signal_noise_ratio: 67.0432 - lr: 5.0000e-05 Epoch 14/50 75/75 [==============================] - 36s 477ms/step - loss: 0.1427 - peak_signal_noise_ratio: 65.0882 - val_loss: 0.1122 - val_peak_signal_noise_ratio: 67.1391 - lr: 5.0000e-05 Epoch 15/50 75/75 [==============================] - 40s 540ms/step - loss: 0.1452 - peak_signal_noise_ratio: 65.0278 - val_loss: 0.1175 - val_peak_signal_noise_ratio: 66.5287 - lr: 5.0000e-05 Epoch 16/50 75/75 [==============================] - 35s 473ms/step - loss: 0.1403 - peak_signal_noise_ratio: 65.3371 - val_loss: 0.1127 - val_peak_signal_noise_ratio: 67.0209 - lr: 5.0000e-05 Epoch 17/50 75/75 [==============================] - 36s 476ms/step - loss: 0.1374 - peak_signal_noise_ratio: 65.4539 - val_loss: 0.1063 - val_peak_signal_noise_ratio: 67.3760 - lr: 5.0000e-05 Epoch 18/50 75/75 [==============================] - 41s 542ms/step - loss: 0.1317 - peak_signal_noise_ratio: 65.7337 - val_loss: 0.1116 - val_peak_signal_noise_ratio: 67.1429 - lr: 5.0000e-05 Epoch 19/50 75/75 [==============================] - 35s 470ms/step - loss: 0.1356 - peak_signal_noise_ratio: 65.6993 - val_loss: 0.1098 - val_peak_signal_noise_ratio: 67.1781 - lr: 5.0000e-05 Epoch 20/50 75/75 [==============================] - 36s 475ms/step - loss: 0.1296 - peak_signal_noise_ratio: 65.9208 - val_loss: 0.1088 - val_peak_signal_noise_ratio: 67.4548 - lr: 5.0000e-05 Epoch 21/50 75/75 [==============================] - 35s 470ms/step - loss: 0.1310 - peak_signal_noise_ratio: 65.7405 - val_loss: 0.1079 - val_peak_signal_noise_ratio: 67.3210 - lr: 5.0000e-05 Epoch 22/50 75/75 [==============================] - 41s 541ms/step - loss: 0.1321 - peak_signal_noise_ratio: 65.7220 - val_loss: 0.1077 - val_peak_signal_noise_ratio: 67.6208 - lr: 5.0000e-05 Epoch 23/50 75/75 [==============================] - 41s 541ms/step - loss: 0.1321 - peak_signal_noise_ratio: 65.9508 - val_loss: 0.1049 - val_peak_signal_noise_ratio: 67.6112 - lr: 5.0000e-05 Epoch 24/50 75/75 [==============================] - 35s 471ms/step - loss: 0.1313 - peak_signal_noise_ratio: 65.8862 - val_loss: 0.1037 - val_peak_signal_noise_ratio: 67.5402 - lr: 5.0000e-05 Epoch 25/50 75/75 [==============================] - 35s 471ms/step - loss: 0.1354 - peak_signal_noise_ratio: 65.7297 - val_loss: 0.1084 - val_peak_signal_noise_ratio: 67.4549 - lr: 5.0000e-05 Epoch 26/50 75/75 [==============================] - 35s 470ms/step - loss: 0.1325 - peak_signal_noise_ratio: 65.7795 - val_loss: 0.1032 - val_peak_signal_noise_ratio: 67.6249 - lr: 5.0000e-05 Epoch 27/50 75/75 [==============================] - 36s 475ms/step - loss: 0.1299 - peak_signal_noise_ratio: 65.8841 - val_loss: 0.1046 - val_peak_signal_noise_ratio: 67.4232 - lr: 5.0000e-05 Epoch 28/50 75/75 [==============================] - 41s 542ms/step - loss: 0.1292 - peak_signal_noise_ratio: 65.9190 - val_loss: 0.1099 - val_peak_signal_noise_ratio: 67.4442 - lr: 5.0000e-05 Epoch 29/50 75/75 [==============================] - 41s 542ms/step - loss: 0.1266 - peak_signal_noise_ratio: 66.1769 - val_loss: 0.1058 - val_peak_signal_noise_ratio: 67.0410 - lr: 5.0000e-05 Epoch 30/50 75/75 [==============================] - 36s 477ms/step - loss: 0.1182 - peak_signal_noise_ratio: 66.5246 - val_loss: 0.0994 - val_peak_signal_noise_ratio: 68.0064 - lr: 5.0000e-05 Epoch 31/50 75/75 [==============================] - 40s 539ms/step - loss: 0.1372 - peak_signal_noise_ratio: 65.7111 - val_loss: 0.1099 - val_peak_signal_noise_ratio: 67.0264 - lr: 5.0000e-05 Epoch 32/50 75/75 [==============================] - 40s 541ms/step - loss: 0.1286 - peak_signal_noise_ratio: 66.0296 - val_loss: 0.1022 - val_peak_signal_noise_ratio: 67.6948 - lr: 5.0000e-05 Epoch 33/50 75/75 [==============================] - 41s 545ms/step - loss: 0.1253 - peak_signal_noise_ratio: 66.3000 - val_loss: 0.1050 - val_peak_signal_noise_ratio: 67.5943 - lr: 5.0000e-05 Epoch 34/50 75/75 [==============================] - 35s 472ms/step - loss: 0.1246 - peak_signal_noise_ratio: 66.3999 - val_loss: 0.1091 - val_peak_signal_noise_ratio: 67.1922 - lr: 5.0000e-05 Epoch 35/50 75/75 [==============================] - ETA: 0s - loss: 0.1270 - peak_signal_noise_ratio: 66.1083 Epoch 00035: ReduceLROnPlateau reducing learning rate to 2.499999936844688e-05. 75/75 [==============================] - 41s 541ms/step - loss: 0.1270 - peak_signal_noise_ratio: 66.1083 - val_loss: 0.0969 - val_peak_signal_noise_ratio: 67.9300 - lr: 5.0000e-05 Epoch 36/50 75/75 [==============================] - 35s 471ms/step - loss: 0.1247 - peak_signal_noise_ratio: 66.3227 - val_loss: 0.0989 - val_peak_signal_noise_ratio: 67.9348 - lr: 2.5000e-05 Epoch 37/50 75/75 [==============================] - 36s 475ms/step - loss: 0.1181 - peak_signal_noise_ratio: 66.7838 - val_loss: 0.1129 - val_peak_signal_noise_ratio: 66.7198 - lr: 2.5000e-05 Epoch 38/50 75/75 [==============================] - 35s 473ms/step - loss: 0.1167 - peak_signal_noise_ratio: 66.7812 - val_loss: 0.1010 - val_peak_signal_noise_ratio: 67.7690 - lr: 2.5000e-05 Epoch 39/50 75/75 [==============================] - 36s 474ms/step - loss: 0.1219 - peak_signal_noise_ratio: 66.5538 - val_loss: 0.1068 - val_peak_signal_noise_ratio: 67.1847 - lr: 2.5000e-05 Epoch 40/50 75/75 [==============================] - ETA: 0s - loss: 0.1172 - peak_signal_noise_ratio: 66.7736 Epoch 00040: ReduceLROnPlateau reducing learning rate to 1.249999968422344e-05. 75/75 [==============================] - 35s 472ms/step - loss: 0.1172 - peak_signal_noise_ratio: 66.7736 - val_loss: 0.1048 - val_peak_signal_noise_ratio: 67.5000 - lr: 2.5000e-05 Epoch 41/50 75/75 [==============================] - 35s 472ms/step - loss: 0.1133 - peak_signal_noise_ratio: 67.0603 - val_loss: 0.1098 - val_peak_signal_noise_ratio: 67.2724 - lr: 1.2500e-05 Epoch 42/50 75/75 [==============================] - 41s 543ms/step - loss: 0.1102 - peak_signal_noise_ratio: 67.2660 - val_loss: 0.0950 - val_peak_signal_noise_ratio: 68.3952 - lr: 1.2500e-05 Epoch 43/50 75/75 [==============================] - 41s 543ms/step - loss: 0.1136 - peak_signal_noise_ratio: 67.0640 - val_loss: 0.1014 - val_peak_signal_noise_ratio: 67.9000 - lr: 1.2500e-05 Epoch 44/50 75/75 [==============================] - 35s 472ms/step - loss: 0.1103 - peak_signal_noise_ratio: 67.3031 - val_loss: 0.0962 - val_peak_signal_noise_ratio: 68.2997 - lr: 1.2500e-05 Epoch 45/50 75/75 [==============================] - 35s 471ms/step - loss: 0.1151 - peak_signal_noise_ratio: 66.9287 - val_loss: 0.1050 - val_peak_signal_noise_ratio: 67.4930 - lr: 1.2500e-05 Epoch 46/50 75/75 [==============================] - 36s 474ms/step - loss: 0.1124 - peak_signal_noise_ratio: 67.1526 - val_loss: 0.1068 - val_peak_signal_noise_ratio: 67.4943 - lr: 1.2500e-05 Epoch 47/50 75/75 [==============================] - ETA: 0s - loss: 0.1100 - peak_signal_noise_ratio: 67.3251 Epoch 00047: ReduceLROnPlateau reducing learning rate to 6.24999984211172e-06. 75/75 [==============================] - 41s 541ms/step - loss: 0.1100 - peak_signal_noise_ratio: 67.3251 - val_loss: 0.1015 - val_peak_signal_noise_ratio: 67.9865 - lr: 1.2500e-05 Epoch 48/50 75/75 [==============================] - 40s 540ms/step - loss: 0.1114 - peak_signal_noise_ratio: 67.2343 - val_loss: 0.1008 - val_peak_signal_noise_ratio: 67.8670 - lr: 6.2500e-06 Epoch 49/50 75/75 [==============================] - 35s 473ms/step - loss: 0.1092 - peak_signal_noise_ratio: 67.3003 - val_loss: 0.1042 - val_peak_signal_noise_ratio: 67.6516 - lr: 6.2500e-06 Epoch 50/50 75/75 [==============================] - 35s 470ms/step - loss: 0.1058 - peak_signal_noise_ratio: 67.5498 - val_loss: 0.1034 - val_peak_signal_noise_ratio: 67.6350 - lr: 6.2500e-06 CPU times: user 33min 51s, sys: 3min 22s, total: 37min 13s Wall time: 35min 20s

推論

def plot_results(images, titles, figure_size=(12, 12)):

fig = plt.figure(figsize=figure_size)

for i in range(len(images)):

fig.add_subplot(1, len(images), i + 1).set_title(titles[i])

_ = plt.imshow(images[i])

plt.axis("off")

plt.show()

def infer(original_image):

image = keras.preprocessing.image.img_to_array(original_image)

image = image.astype("float32") / 255.0

image = np.expand_dims(image, axis=0)

output = model.predict(image)

output_image = output[0] * 255.0

output_image = output_image.clip(0, 255)

output_image = output_image.reshape(

(np.shape(output_image)[0], np.shape(output_image)[1], 3)

)

output_image = Image.fromarray(np.uint8(output_image))

original_image = Image.fromarray(np.uint8(original_image))

return output_image

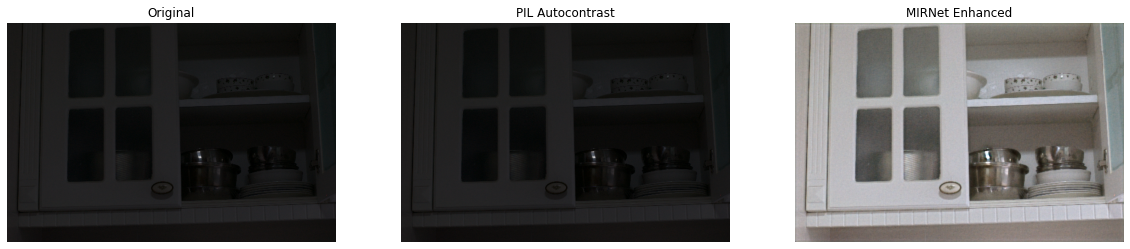

テスト画像上の推論

MIRNet により強化された LOLDataset からのテスト画像を PIL.ImageOps.autocontrast() 関数を通して強化された画像と比較します。

for low_light_image in random.sample(test_low_light_images, 6):

original_image = Image.open(low_light_image)

enhanced_image = infer(original_image)

plot_results(

[original_image, ImageOps.autocontrast(original_image), enhanced_image],

["Original", "PIL Autocontrast", "MIRNet Enhanced"],

(20, 12),

)

以上